The less integration there is to do, the less unknown time there is before a new release is ready. Feature Branching helps by pushing this integration work to individual feature streams, so that, if left alone, a stream can push to mainline as soon as the feature is ready.

But that "left alone" point is important. If anyone else pushes to mainline, then we introduce some integration work before the feature is done. Because the branches are isolated, a developer working on one branch doesn't have much visibility into what other features may push, and how much work would be involved to integrate them.

While there is a danger that high priority features can face integration delays, we can manage this by preventing pushes of lower-priority features. Continuous Integration effectively eliminates delivery risk. The integrations are so small that they usually proceed without comment.

An awkward integration would be one that takes more than a few minutes to resolve. The very worst case would be a conflict that causes someone to restart their work from scratch, but that would still be less than a day's work to lose, and is thus not going to be something that's likely to trouble a board of stakeholders.

Furthermore we're doing integration regularly as we develop the software, so we can face problems while we have more time to deal with them and can practice how to resolve them. Even if a team isn't releasing to production regularly, Continuous Integration is important because it allows everyone to see exactly what the state of the product is.

There are no hidden integration efforts that need to be done before release; any effort in integration is already baked in. I've not seen any serious studies that measure how time spent on integration matches the size of integrations, but my anecdotal evidence strongly suggests that the relationship isn't linear.

If there's twice as much code to integrate, it's more likely to be four times as long to carry out the integration. It's rather like how we need three lines to fully connect three nodes, but six lines to connect four of them.
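The node analogy can be checked with a little arithmetic: fully connecting n nodes takes n(n-1)/2 lines. The sketch below is only an illustration of that count, not a measured model of integration effort, and the function name `connections` is an assumption made for this example.

```python
def connections(nodes: int) -> int:
    """Lines needed to connect every node to every other node: n(n-1)/2."""
    if nodes < 0:
        raise ValueError("nodes must be non-negative")
    return nodes * (nodes - 1) // 2


# Three nodes need 3 lines and four nodes need 6, as in the text.
# Doubling from four nodes to eight takes us from 6 lines to 28.
for n in (3, 4, 8):
    print(n, connections(n))
```

The count grows roughly with the square of the number of nodes, which is the same shape as the "twice the code, four times the effort" intuition.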

Integration is all about connections, hence the non-linear increase, one that's reflected in the experience of my colleagues. In organizations that are using feature branches, much of this lost time is felt by the individual. Several hours spent trying to rebase on a big change to mainline is frustrating.

A few days spent waiting for a code review on a finished pull request, only for another big mainline change to land during the waiting period, is even more frustrating.

Having to put work on a new feature aside to debug a problem found in an integration test of a feature finished two weeks ago saps productivity.

When we're doing Continuous Integration, integration is generally a non-event. I pull down the mainline, run the build, and push. If there is a conflict, the small amount of code I've written is fresh in my mind, so it's usually easy to see.
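The pull, build, push cycle can be sketched as a small script. This is a minimal illustration under stated assumptions: the team uses git, the build is started by a hypothetical `./build.sh` command, and the names `integrate` and `run` are invented for this example. The command runner is injectable so the logic can be exercised without touching a real repository.

```python
import subprocess
from typing import Callable, Sequence

# A runner takes a command and returns its exit code (0 means success).
Runner = Callable[[Sequence[str]], int]


def run(cmd: Sequence[str]) -> int:
    """Run a command for real and return its exit code."""
    return subprocess.run(list(cmd)).returncode


def integrate(build_cmd: Sequence[str], runner: Runner = run) -> bool:
    """Pull the mainline, run the build, and push only if both succeed."""
    steps = [
        ["git", "pull"],   # bring in everyone else's changes first
        list(build_cmd),   # hypothetical build-and-test command
        ["git", "push"],   # share our work only after a green build
    ]
    for step in steps:
        if runner(step) != 0:
            return False   # stop at the first failure; nothing is pushed
    return True
```

Calling `integrate(["./build.sh"])` would run the three steps in order. Because the push is the last step, a failed pull or a red build never reaches the mainline.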

The workflow is regular, so we're practiced at it, and we're incentivized to automate it as much as possible. Like many of these non-linear effects, integration can easily become a trap where people learn the wrong lesson.

A difficult integration may be so traumatic that a team decides it should do integrations less often, which only exacerbates the problem in the future. What's happening here is that we are seeing much closer collaboration between the members of the team.

Should two developers make decisions that conflict, we find out when we integrate. So the less time between integrations, the less time before we detect the conflict, and we can deal with the conflict before it grows too big. With high-frequency integration, our source control system becomes a communication channel, one that can communicate things that might otherwise go unsaid.

Bugs - these are the nasty things that destroy confidence and mess up schedules and reputations. Bugs in deployed software make users angry with us. Bugs cropping up during regular development get in our way, making it harder to get the rest of the software working correctly.

Continuous Integration doesn't get rid of bugs, but it does make them dramatically easier to find and remove. This is less because of the high-frequency integration and more due to the essential introduction of self-testing code.

Continuous Integration doesn't work without self-testing code because without decent tests, we can't keep a healthy mainline. Continuous Integration thus institutes a regular regimen of testing.

If the tests are inadequate, the team will quickly notice, and can take corrective action. If a bug appears due to a semantic conflict, it's easy to detect because there's only a small amount of code to be integrated. Frequent integrations also work well with Diff Debugging, so even a bug noticed weeks later can be narrowed down to a small change.
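One way to picture Diff Debugging is as a binary search over the commit history. The sketch below is only an illustration: `first_bad_commit` and the `is_good` callback are hypothetical names, and it assumes the history is ordered oldest to newest, the first commit is known good, the last is known bad, and every commit after the faulty one also fails.

```python
from typing import Callable, Sequence


def first_bad_commit(commits: Sequence[str],
                     is_good: Callable[[str], bool]) -> str:
    """Binary-search an ordered history for the first failing commit."""
    if len(commits) < 2:
        raise ValueError("need at least a good and a bad commit")
    lo, hi = 0, len(commits) - 1
    if not is_good(commits[lo]) or is_good(commits[hi]):
        raise ValueError("expected first commit good and last commit bad")
    # Invariant: commits[lo] is good, commits[hi] is bad.
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_good(commits[mid]):
            lo = mid
        else:
            hi = mid
    return commits[hi]


# Hypothetical history of ten small commits where the bug arrived in "c6".
history = [f"c{i}" for i in range(10)]
print(first_bad_commit(history, lambda c: int(c[1:]) < 6))
```

With small, frequent commits the culprit returned by such a search is a small change, which is what makes the fault easy to spot. Tools such as `git bisect` automate this kind of search.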

Bugs are also cumulative. The more bugs we have, the harder it is to remove each one. This is partly because we get bug interactions, where failures show as the result of multiple faults - making each fault harder to find.

It's also psychological - people have less energy to find and get rid of bugs when there are many of them. Thus self-testing code reinforced by Continuous Integration has another exponential effect in reducing the problems caused by defects.

This runs into another phenomenon that many people find counter-intuitive. Seeing how often introducing a change means introducing bugs, people conclude that to have high reliability software they need to slow down the release rate.

This was firmly contradicted by the DORA research program led by Nicole Forsgren. They found that elite teams deployed to production more rapidly, more frequently, and had a dramatically lower incidence of failure when they made these changes. Most teams observe that over time, codebases deteriorate.

Early decisions were good at the time, but are no longer optimal after six months' work. But changing the code to incorporate what the team has learned means introducing changes deep in the existing code, which results in difficult merges that are both time-consuming and full of risk.

Everyone recalls that time someone made what would be a good change for the future, but caused days of effort breaking other people's work. Given that experience, nobody wants to rework the structure of existing code, even though it's now awkward for everyone to build on, thus slowing down delivery of new features.

Refactoring is an essential technique to attenuate and indeed reverse this process of decay. A team that refactors regularly has a disciplined technique to improve the structure of a code base by using small, behavior-preserving transformations of the code.

These characteristics of the transformations greatly reduce their chances of introducing bugs, and they can be done quickly, especially when supported by a foundation of self-testing code. Applying refactoring at every opportunity, a team can improve the structure of an existing codebase, making it easier and faster to add new capabilities.

But this happy story can be torpedoed by integration woes. A two week refactoring session may greatly improve the code, but result in long merges because everyone else has been spending the last two weeks working with the old structure.

This raises the costs of refactoring to prohibitive levels. Frequent integration solves this dilemma by ensuring that both those doing the refactoring and everyone else are regularly synchronizing their work. When using Continuous Integration, if someone makes intrusive changes to a core library I'm using, I only have to adjust a few hours of programming to these changes.

If they do something that clashes with the direction of my changes, I know right away, so I have the opportunity to talk to them so we can figure out a better way forward. So far in this article I've raised several counter-intuitive notions about the merits of high-frequency integration: that the more often we integrate, the less time we spend integrating, and that frequent integration leads to fewer bugs.

Here is perhaps the most important counter-intuitive notion in software development: that teams that spend a lot of effort keeping their code base healthy deliver features faster and cheaper.

Time invested in writing tests and refactoring delivers impressive returns in delivery speed, and Continuous Integration is a core part of making that work in a team setting. How long before we can make this live?

Continuous Integration allows us to maintain a Release-Ready Mainline, which means the decision to release the latest version of the product into production is purely a business decision. If the stakeholders want the latest to go live, it's a matter of minutes running an automated pipeline to make it so.

This allows the customers of the software greater control of when features are released, and encourages them to collaborate more closely with the development team. Continuous Integration and a Release-Ready Mainline remove one of the biggest barriers to frequent deployment.

Frequent deployment is valuable because it allows our users to get new features more rapidly, to give more rapid feedback on those features, and generally become more collaborative in the development cycle.

This helps break down the barriers between customers and development - barriers which I believe are the biggest barriers to successful software development. All those benefits sound rather juicy.

But folks as experienced or cynical as I am are always suspicious of a bare list of benefits. Few things come without a cost, and decisions about architecture and process are usually a matter of trade-offs.

But I confess that Continuous Integration is one of those rare cases where there's little downside for a committed and skillful team to utilize it.

The cost imposed by sporadic integration is so great that almost any team can benefit by increasing their integration frequency. There is some limit to when the benefits stop piling up, but that limit sits at hours rather than days, which is exactly the territory of Continuous Integration.

The interplay between self-testing code, Continuous Integration, and Refactoring is particularly strong. We've been using this approach for two decades at Thoughtworks, and our only question is how to do it more effectively - the core approach is proven.

But that doesn't mean that Continuous Integration is for everyone. Those two adjectives, "committed" and "skillful", indicate the contexts where Continuous Integration isn't a good fit. A good counter-example to this is a classical open-source project, where there are one or two maintainers and many contributors.

In such a situation even the maintainers are only doing a few hours a week on the project, they don't know the contributors very well, and don't have good visibility for when contributors contribute or the standards they should follow when they do.

This is the environment that led to a feature branch workflow and pull-requests. In such a context Continuous Integration isn't plausible, although efforts to increase the integration frequency can still be valuable.

Continuous Integration is more suited to a team working full-time on a product, as is usually the case with commercial software. But there is much middle ground between the classical open-source and the full-time model.

We need to use our judgment about what integration policy to use that fits the commitment of the team. The second adjective looks at the skill of the team in following the necessary practices. If a team attempts Continuous Integration without a strong test suite, they will run into all sorts of trouble because they don't have a mechanism for screening out bugs.

If they don't automate, integration will take too long, interfering with the flow of development. If folks aren't disciplined about ensuring their pushes to mainline are done with green builds, then the mainline will end up broken all the time, getting in the way of everyone's work.

Anyone who is considering introducing Continuous Integration has to bear these skills in mind. Instituting Continuous Integration without self-testing code won't work, and it will also give an inaccurate impression of what Continuous Integration is like when it's done well.

That said, I don't think the skill demands are particularly hard. We don't need rock-star developers to get this process working in a team. Indeed rock-star developers are often a barrier, as people who think of themselves that way usually aren't very disciplined.

The skills for these technical practices aren't that hard to learn; usually the problem is finding a good teacher, and forming the habits that crystallize the discipline. Once the team gets the hang of the flow, it usually feels comfortable, smooth - and fast.

One of the hard things about describing how to introduce a practice like Continuous Integration is that the path depends very much on where you're starting. Writing this, I don't know what kind of code you are working on, what skills and habits your team possesses, let alone the broader organizational context.

All anyone like me can do is point out some common signposts, in the hope that it will help you find your own path. When introducing any new practice, it's important to be clear on why we're doing it.

My list of benefits above includes the most common reasons, but different contexts lead to a different level of importance for them. Some benefits are harder to appreciate than others.

Reducing waste in integration addresses a frustrating problem, and can be easily sensed as we make progress. Enabling refactoring to reduce the cruft in a system and improve overall productivity is more tricky to see.

It takes time before we see an effect, and it's hard to sense the counter-factual. Yet this is probably the most valuable benefit of Continuous Integration. The list of practices above indicates the skills a team needs to learn in order to make Continuous Integration work.

Some of these can bring value even before we get close to the high integration frequency. Self-testing code adds stability to a system even with infrequent commits.

One target can be to aim to double integration frequency. If feature branches typically run for ten days, figure out how to cut them down to five. This may involve better build and test automation, and creative thinking on how a large task can be split into smaller, independently integrated tasks.

If we use pre-integration reviews, we could include explicit steps in those reviews to check test coverage and encourage smaller commits. If we are starting a new project, we can begin with Continuous Integration from the beginning.

We should keep an eye on build times and take action as soon as we start going slower than the ten minute rule. By acting quickly we'll make the necessary restructurings before the code base gets so big that it becomes a major pain.

Above all we should get some help. We should find someone who has done Continuous Integration before to help us. Like any new technique it's hard to introduce it when we don't know what the final result looks like. It may cost money to get this support, but we'll otherwise pay in lost time and productivity.

After all we've made most of the mistakes that there are to make. Continuous Integration was developed as a practice by Kent Beck as part of Extreme Programming in the 1990s. At that time pre-release integration was the norm, with release frequencies often measured in years. There had been a general push to iterative development, with faster release cycles.

But few teams were thinking in weeks between releases. Kent defined the practice, developed it with projects he worked on, and established how it interacted with the other key practices upon which it relies. Microsoft had been known for doing daily builds (usually overnight), but without the testing regimen or the focus on fixing defects that are such crucial elements of Continuous Integration.

Some people credit Grady Booch for coining the term, but he only used the phrase as an offhand description in a single sentence in his object-oriented design book.

He did not treat it as a defined practice; indeed it didn't appear in the index. As CI Services became popular, many people used them to run regular builds on feature branches. This, as explained above, isn't Continuous Integration at all, but it led to many people saying and thinking they were doing Continuous Integration when they were doing something significantly different, which causes a lot of confusion.

Some folks decided to tackle this Semantic Diffusion by coining a new term: Trunk-Based Development. I've read some people trying to formulate some distinction between the two, but I find these distinctions are neither consistent nor compelling.

I don't use the term Trunk-Based Development, partly because I don't think coining a new name is a good way to counter semantic diffusion, but mostly because renaming this technique rudely erases the work of those, especially Kent Beck, who championed and developed Continuous Integration in the beginning.

Despite me avoiding the term, there is a lot of good information about Continuous Integration that's written under the flag of Trunk-Based Development. In particular, Paul Hammant has written a lot of excellent material on his website.

Doing an automated build on feature branches is useful, but it is only semi-integration. However it is a common confusion that using a daemon build in this way is what Continuous Integration is about. While using a CI Service is a useful aid to doing Continuous Integration, we shouldn't confuse a tool for the practice.

The early descriptions of Continuous Integration focused on the cycle of developer integration with the mainline in the team's development environment. Such descriptions didn't talk much about the journey from an integrated mainline to a production release.

That doesn't mean they weren't in people's minds. In some situations, there wasn't much else after mainline integration. I recall Kent showing me a system he was working on in Switzerland in the late 90's where they deployed to production, every day, automatically.

But this was a Smalltalk system that didn't have complicated steps for a production deploy. In the early 2000s at Thoughtworks, we often had situations where that path to production was much more complicated.

This led to the notion that there was an activity beyond Continuous Integration that addressed that path. That activity came to be known as Continuous Delivery. The aim of Continuous Delivery is that the product should always be in a state where we can release the latest build.

This is essentially ensuring that the release to production is a business decision. For many people these days, Continuous Integration is about integrating code to the mainline in the development team's environment, and Continuous Delivery is the rest of the deployment pipeline heading to a production release.

Others argue that Continuous Delivery is merely a synonym for Continuous Integration. Continuous Integration ensures everyone integrates their code at least daily to the mainline in version control. Continuous Delivery then carries out any steps required to ensure that the product is releasable to production whenever anyone wishes.

Continuous Deployment means the product is automatically released to production whenever it passes all the automated tests in the deployment pipeline.

With Continuous Deployment every commit pushed to mainline as part of Continuous Integration will be automatically deployed to production providing all of the verifications in the deployment pipeline are green. Continuous Delivery just assures that this is possible and is thus a pre-requisite for Continuous Deployment.
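The distinction between the two can be expressed as a small decision rule. This is a hedged sketch rather than a description of any particular pipeline tool: the `Policy` enum, the `goes_to_production` function, and the check names are all hypothetical.

```python
from enum import Enum
from typing import Mapping


class Policy(Enum):
    CONTINUOUS_DELIVERY = "delivery"
    CONTINUOUS_DEPLOYMENT = "deployment"


def goes_to_production(checks: Mapping[str, bool],
                       policy: Policy,
                       business_says_release: bool = False) -> bool:
    """Decide whether a commit on mainline is released to production."""
    releasable = all(checks.values())   # every pipeline verification is green
    if not releasable:
        return False                    # a red pipeline never releases
    if policy is Policy.CONTINUOUS_DEPLOYMENT:
        return True                     # green means it ships automatically
    return business_says_release        # Delivery: releasable, but someone decides


green = {"unit": True, "acceptance": True}
print(goes_to_production(green, Policy.CONTINUOUS_DEPLOYMENT))
print(goes_to_production(green, Policy.CONTINUOUS_DELIVERY))
```

In both policies the pipeline has to be green; the only difference is whether a human decision sits between a releasable build and production.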

Pull Requests, an artifact of GitHub, are now widely used on software projects. Essentially they provide a way to add some process to the push to mainline, usually involving a pre-integration code review, requiring another developer to approve before the push can be accepted into the mainline.

They developed mostly in the context of feature branching in open-source projects, ensuring that the maintainers of a project can review that a contribution fits properly into the style and future intentions of the project.

The pre-integration code review can be problematic for Continuous Integration because it usually adds significant friction to the integration process. Instead of an automated process that can be done within minutes, we have to find someone to do the code review, schedule their time, and wait for feedback before the review is accepted.

Although some organizations may be able to get to flow within minutes, this can easily end up being hours or days - breaking the timing that makes Continuous Integration work.

Those who do Continuous Integration deal with this by reframing how code review fits into their workflow. Pair Programming is popular because it creates a continuous real-time code review as the code is being written, producing a much faster feedback loop for the review.

Many teams find that Refinement Code Review is an important force in maintaining a healthy code base, but works at its best when Continuous Integration produces an environment friendly to refactoring. We should remember that pre-integration review grew out of an open-source context where contributions appear impromptu from weakly connected developers.

Practices that are effective in that environment need to be reassessed for a full-time team of closely-knit staff. Databases offer a specific challenge as we increase integration frequency. It's easy to include database schema definitions and load scripts for test data in the version-controlled sources.

But that doesn't help us with data outside of version-control, such as production databases. If we change the database schema, we need to know how to handle existing data.

With traditional pre-release integration, data migration is a considerable challenge, often spinning up special teams just to carry out the migration.

At first blush, attempting high-frequency integration would introduce an untenable amount of data migration work. In practice, however, a change in perspective removes this problem.

We faced this issue at Thoughtworks on our early projects using Continuous Integration, and solved it by shifting to an Evolutionary Database Design approach, developed by my colleague Pramod Sadalage. The key to this methodology is to define database schema and data through a series of migration scripts that alter both the database schema and data.

Each migration is small, so is easy to reason about and test. The migrations compose naturally, so we can run hundreds of migrations in sequence to perform significant schema changes and migrate the data as we go.

We can store these migrations in version-control in sync with the data access code in the application, allowing us to build any version of the software, with the correct schema and correctly structured data.
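The idea of small, ordered migrations can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration and not the tooling the teams described here used: the `customer` table, the `schema_version` bookkeeping table, the three migrations, and the `migrate` function are all assumptions made for the example.

```python
import sqlite3
from typing import Sequence, Tuple

# Hypothetical migrations, ordered by version. Each one is small: the first
# two change the schema, the third migrates existing data.
MIGRATIONS = [
    (1, "CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    (2, "ALTER TABLE customer ADD COLUMN email TEXT"),
    (3, "UPDATE customer SET email = lower(name) || '@example.com' "
        "WHERE email IS NULL"),
]


def migrate(conn: sqlite3.Connection,
            migrations: Sequence[Tuple[int, str]] = MIGRATIONS) -> int:
    """Apply every migration newer than the recorded version, in order."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)")
    row = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()
    current = row[0] or 0               # no rows yet means version 0
    for version, statement in migrations:
        if version > current:
            with conn:                  # commit after each migration
                conn.execute(statement)
                conn.execute(
                    "INSERT INTO schema_version (version) VALUES (?)",
                    (version,))
            current = version
    return current


# The same scripts run against a fresh test database or one holding data.
db = sqlite3.connect(":memory:")
migrate(db, MIGRATIONS[:1])             # an older build knows only version 1
db.execute("INSERT INTO customer (name) VALUES ('Ada')")
db.commit()
print(migrate(db))                      # a newer build brings the data along
```

Because the recorded version lives in the database itself, running `migrate` again is a no-op, and the list of migrations can sit in version control next to the data access code that depends on it.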

These migrations can be run on test data, and on production databases. Most software development is about changing existing code. The cost and response time for adding new features to a code base depends greatly upon the condition of that code base.

A crufty code base is harder, and more expensive to modify. To keep cruft to a minimum a team needs to be able to regularly refactor the code, changing its structure to reflect changing needs and incorporate lessons the team learns from working on the product.

Continuous Integration is vital for a healthy product because it is a key component of this kind of evolutionary design ecosystem. Together with and supported by self-testing code, it's the underpinning for refactoring. These technical practices, born together in Extreme Programming, can enable a team to deliver regular enhancement of a product to take advantage of changing needs and technological opportunities.

An essay like this can only cover so much ground, but this is an important topic so I've created a guide page on my website to point you to more information. To explore Continuous Integration in more detail I suggest taking a look at Paul Duvall's appropriately titled book on the subject which won a Jolt award - more than I've ever managed.

For more on the broader process of Continuous Delivery, take a look at Jez Humble and Dave Farley's book - which also beat me to a Jolt award. My article on Patterns for Managing Source Code Branches looks at the broader context, showing how Continuous Integration fits into the wider decision space of choosing a branching strategy.

As ever, the driver for choosing when to branch is knowing you are going to integrate. The original article on Continuous Integration describes our experiences as Matt helped put together continuous integration on a Thoughtworks project. Paul Hammant's website contains a lot of useful and practical information.

Clare Sudbery recently wrote an informative report available through O'Reilly. First and foremost to Kent Beck and my many colleagues on the Chrysler Comprehensive Compensation (C3) project.

This was my first chance to see Continuous Integration in action with a meaningful amount of unit tests. It showed me what was possible and gave me an inspiration that led me for many years. Thanks to Matt Foemmel, Dave Rice, and everyone else who built and maintained Continuous Integration on Atlas.

That project was a sign of CI on a larger scale and showed the benefits it made to an existing project. Paul Julius, Jason Yip, Owen Rodgers, Mike Roberts and many other open source contributors have participated in building some variant of CruiseControl, the first CI service.

Although a CI service isn't essential, most teams find it helpful. CruiseControl and other CI services have played a big part in popularizing and enabling software developers to use Continuous Integration. In the fall of , Michael Lihs emailed me with suggested revisions to the article, which inspired me to do a major overhaul.

Carving out some testing resources to allow for this experimentation can help IT feel connected, provide a creative outlet, keep talented developers around, and ultimately provide a better site experience.


The Running Tested Features (RTF) metric tells you how many software features are fully developed and passing all acceptance tests, thus becoming implemented in the integrated product.

The RTF metric for the project on the left shows more fully developed features as the sprint progresses, making for a healthy RTF growth. The project on the right appears to have issues, which may arise from factors including defects, failed tests, and changing requirements. Agile managers use the velocity metric to predict how quickly a team can work towards a certain goal by comparing the average story points or hours committed to and completed in previous sprints.

The Cumulative Flow Diagram (CFD) shows summary information for a project, including work-in-progress, completed tasks, testing, velocity, and the current backlog. The following diagram allows you to visualize bottlenecks in the Agile process: Colored bands that are disproportionately fat represent stages of the workflow for which there is too much work in progress.

Earned Value Management encompasses a series of measurements to compare a planned baseline value before the project begins with actual technical progress and hours spent on the project.

The comparison is typically in the form of a dollar value, and it requires particular preparation to use in an Agile framework, incorporating story points to measure earned value and planned value.

You can use EVM methods at many levels, from the single task level to the total project level. This metric measures the percentage of test coverage achieved by automated testing. As time progresses and more tests get automated, you should expect higher test coverage and, as a result, increased software quality.

Static code analysis uses a set of tools to examine the code without executing it. For example, a compiler can analyze code to find lexical errors, syntax mistakes, and sometimes semantic errors.

Therefore, static analysis is a good way to check for sound coding standards in a program. Escaped defects is a simple metric that counts the defects for a given release that were found after the release date. Such defects have been found by the customer as opposed to the Agile development team.

You can break software defects down into a number of categories. You can group defects into these categories and make visual representations of the data using Pareto charts.


Even if you're planning to stack your technology prototype with features, remember to balance functionality with perfection by including only what's essential to testing your idea.

When selecting the necessary components for your prototype, make functionality priority No. 1. Your prototype should contain four components in order to adequately test the user experience. For instance, we recently built Tiny Eye, a virtual reality seek-and-find app. Because we were new to VR, we test-drove multiple platforms before choosing Google Cardboard for the product.

Prototyping is the best way to get a handle on how unproven technologies might influence the time and monetary costs of development. What's your product idea's riskiest assumption? Take the answer to that question and create a prototype to validate it.

Your prototype should focus only on user flow through an application. Be aware of any part of your application that requires a lot of customer involvement or requires complex interaction.

Prototypes aren't meant to be working, fully-ready products, so don't waste time and resources building them as such. Here are four facets that are better left for late-stage development:

Design can often distract the user from what they should be testing. For example, when we built Emoji Ticker, a visualization tool that displays emoji sent by "Chelsea Handler: Gotta Go" users, we didn't rebuild and prototype code written to communicate between our Matrix boards and Raspberry Pi.

A third-party expert had already built similar software, so we saved our energies for testing bigger, riskier elements of the technology. Are you working toward a revenue number in the coming six months? Do you have limited resources? These questions might affect whether now is even the time to start developing a new MVP.

Also, ask what purpose this minimum viable product will serve. For example, will it attract new users in a market adjacent to the market for your existing products?

If that is one of your current business objectives, then this MVP plan might be strategically viable. Remember, you can develop only a small amount of functionality for your MVP. You will need to be strategic in deciding which limited functionality to include in your MVP.

You can base these decisions on several factors. Whatever you choose, the product must be viable, meaning it must allow your customers to complete an entire task or project and provide a high-quality user experience. An MVP cannot be a user interface with many half-built tools and features.

It must be a working product that your company should be able to sell. With no money to build a business, the founders of Airbnb used their own apartment to validate their idea to create a market offering short-term, peer-to-peer rental housing online.

They created a minimalist website, published photos and other details about their property, and found several paying guests almost immediately. This is a more specific kind of prototype that is explicitly designed to test a specific feature or component of the product.

These prototypes function within their pre-defined boundaries to validate the technology behind a specific function. Prototype testing is not just about gathering feedback and insights from the users and giving that information to the developers.

Fixing a product while still in the testing phase is a much simpler and easier way of proceeding with the launch. That can damage not only your finances but also your reputation — which is invaluable. When you begin with the prototype testing stage, users get a first-hand exclusive look at the product before anyone else in the market.

This is a great opportunity for the developers and the organization to get users involved with the product. Traditionally, requirements are gathered before a product goes into the development stage, and this is where people decide what they would want their product to deliver.

Conceptualizing the product without seeing it and establishing requirements is not exactly feasible, which is why prototype development allows users and developers to solidify requirements for the final design.

When a team of highly skilled and trained developers is working on a product, they may encounter several instances where some believe that a certain implementation is the right thing to do. In contrast, others may think otherwise. Inevitably, differences in opinions may exist during the development stage, giving rise to conflicts.

By testing the prototypes, developers can conduct several different feature iterations and benchmark the resulting performance. This way, they can establish which function generates the best response from the users based on numbers.

There are many other upsides to prototype testing, but knowing why you should test your prototype is simply not enough.

When to test your prototype is another important consideration. Putting your prototype to the test is the safest way to proceed and helps ensure that your product does not meet an untimely end.

But this has some complications because knowing when to test your prototype is also essential and the only way to gather results that carry significance. We have compiled a table that will give you a better understanding of the prototypes and when to test them for maximum significance.

By now, you should be well aware of the different types of prototypes and when developers should test them for maximum efficiency. Testing a prototype is a complex and sequential process. But before we get into the steps, there are a few basic rules that every prototype tester should keep in mind.

Here are the steps that need to be followed with precision to ensure your testing yields the most effective results:

The first step of any prototype testing and evaluation is collecting and analyzing the user data and information. Here, the users or the general public are liable for giving their verdict on what they expect from a particular product. Once the users have given their verdict and all the requirements are in place, you can take further steps.

Clarity is what we look for in the first step, and knowing what to do is the only way of avoiding being vague in the development phase. For instance: Qualaroo specializes in collecting user insights quickly and effortlessly at every stage of the design process.

The user insights are collected by staging prototype URLs via InVision, AdobeXD, and many more prototype testing tools. These prototype testing tools host the URL mockup and allow users to test and give their feedback on the overall experience.

The development team takes up this feedback and insights, making necessary changes in the final product. Therefore, the requirements are defined beforehand, and everything in the prototype is built around those requirements.

The most important yet most obvious step is to build a prototype of the product that is to be tested.

The type of prototype you would be building depends entirely on what you need to test and at what stage of product development you are currently at. The prototype built in this stage is derived entirely from the information that the developers gather in the previous stage while building a preliminary prototype.

This prototype is supposed to be a more refined version of the preliminary prototype that will go into the prototype testing software and should ideally give more information to the users about the product.

With digital prototypes, you can also automate the process of collecting insights using tools like Qualaroo that enable you to ask users questions during testing or after.

This step primarily focuses on you, the tester. You should find out what you want to test before you put your mockup through prototype testing software. There are several things that you can test on prototypes with some obvious imperfections.

Here is a list of those things.

Concept validation: These tests are relatively simple, and they verify whether users can easily understand what they are looking at and what function it performs. They are most commonly used for home page prototypes, but you can also test product pages in e-commerce or dashboards in online tools.

Navigation: Navigation is another thing that you can easily test on digital prototypes. Here, a mockup of the website or the application is hosted, and testers see whether users can easily navigate their way through and find what they are looking for.

Design flow and functionality: Prototypes also effectively determine whether the product and its functions have a smooth flow and let users accomplish the task instead of confusing them.

Microcopy: To test the microcopy, you need to input real labels, menu categories, buttons, and descriptions in your prototype. This will verify whether users understand what they are looking at or find parts of it confusing.

There are also things that prototypes test poorly. Here are a few aspects to keep in mind:

Graphic design — Prototypes are just schematic representations of your final product, and they may not have all the visual elements. Content — Prototypes are not filled with the final content, and are not the best way to check if your content will resonate with your target audience.

To check this, send your content to a couple of people from your target group or display questions in your working product or blog.

Prototypes are not intended to help you collect volumes of data. Prototype testing is about gathering actionable feedback fast, not collecting as much feedback as you can. A preliminary design is a simplified form of the final prototype that gives the users a rough idea about how the final prototype would look.

Start by creating rough sketches. Sketches are a great way to identify if your users can discern the purpose of your application or website.

Sketches and paper prototypes do not require perfection. Instead, you can have multiple prototypes to test different designs and for more meaningful insights. Tasks or scenarios have a form of small narratives.

This is the moment when you use your research questions to compose your tasks. Your research questions will tell you what the tasks should be about. The best example illustrating the difference is a usability study conducted by Jared Spool and his team for Ikea, years ago. Find a way to organize them.

The way the task is formulated influences the results. This is particularly important when designing products or websites that use very specific language.

Try to avoid words that would be leading and make your users accomplish the task faster or in a different way than they would normally. Try to not give clues in general. You booked two weeks off at work. You realize it is an expensive flight but you would like to spend as little as possible.

In addition to this, due to your recent back problems, you are considering upgrading your flight class. The whole idea of testing is to verify whether or not people will be able to use it on their own, without anyone explaining anything to them prior and without anyone persuading them they should be using it.

Research questions are questions you are trying to find answers to by asking users to carry out different scenarios with your prototype. Research questions indicate what exactly you are trying to find out about your prototype or product.


The work is never truly done. There will always be new needs for functional updates or business requests for additional features and settings. We also often face external demands, such as evolving regulatory standards. Larger organizations sometimes use a hybrid approach, applying TDD to more self-contained products or features under a more traditional methodology across the whole organization.

For this reason, TDD is often an ideal approach in smaller, more straightforward development projects. Developers describe the FDD process as natural. Organizations approach their goals and deliverables from the top down.

They plan and design first, build the code next, then have the option to launch new features in production using feature flags or feature toggles. Roles within the organization are clearly defined. FDD also replaces the frequent meetings typical of Agile frameworks with documentation.

The combination provides plenty of flexibility in how we deploy and lets us easily undo and isolate mistakes. We have total control of how a feature interacts with everything else over its entire lifecycle. First, we can develop features without exposing them to the production environment on a two-week feature branch as part of FDD.

And in the event of a rude surprise, we can flip this kill switch to turn off the functionality instantly and keep our debugging invisible. We implement feature flags in two parts: the code (a simple conditional wrapped around our feature) and a feature management platform.
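As a minimal sketch of the code half, assume a hypothetical in-memory `FLAGS` dictionary standing in for a feature management platform. This is not any vendor's SDK, and the flag name `discount-page`, the segment names, and the helper functions are all invented for illustration. Under those assumptions, the conditional and the kill switch might look like this in Python:

```python
# Hypothetical in-memory flag store; a real platform would serve these values remotely.
FLAGS = {"discount-page": {"enabled": True, "allowed_segments": {"beta"}}}


def is_enabled(flag_name, user_segment):
    """Return True only if the flag is on and the user's segment is targeted."""
    flag = FLAGS.get(flag_name)
    if flag is None or not flag["enabled"]:
        return False  # unknown or switched-off flags hide the feature
    return user_segment in flag["allowed_segments"]


def render_nav(user_segment):
    """Build the navigation links, wrapping the new feature in a conditional."""
    links = ["home", "account"]
    if is_enabled("discount-page", user_segment):
        links.append("discounts")
    return links


def kill(flag_name):
    """Kill switch: turn the feature off for everyone without redeploying."""
    FLAGS[flag_name]["enabled"] = False
```

Calling `render_nav("beta")` includes the new link while the flag is on, and calling `kill("discount-page")` hides it again for every segment without touching the feature's own code.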

A large platform like LaunchDarkly enables us to develop complex deployments with granular control over user segmentation. It integrates with our workflows, provides analytics, and offers DevOps governance solutions that allow us to freely run tests anywhere in our stack, including in production.

Revisiting our earlier example, feature flags help us quickly gain granular control over access to the discount page link. We might replace user. When we set the flag as active in our backend, that action validates and applies the user setting.

Test-driven development (TDD) is a well-defined process that creates lean, reusable, and automatable code.

Feature-driven development (FDD) is a versatile framework that approaches development goals from the top down. It scales well, produces clear expectations, and breaks features into pieces of functionality that developers can achieve in two-week development cycles.

We also explored how we can use FDD and feature flags together to minimize the risk of deploying features, facilitate testing in production environments, and give developers more freedom in their code implementation.


Feature-Driven Development (FDD) combines several industry best practices in a five-step framework. The first two steps address the project as a whole. Each feature then iterates through the remaining three steps.

We can sum up Test-Driven Development (TDD) in two expressions: write the test first, and only write code if necessary to pass a test. The feature development cycle looks something like this:

Add a test. Developers begin working on a feature by writing a test that only passes if the feature meets specifications.

Run all tests. The new test should fail, which confirms that our test harness is working. It also shows that we need to write new code for this feature.

Write the simplest code that passes the test.

Rerun all tests, which should result in all tests passing. If any test fails, we revise the new code until all tests pass again.

Refactor code if needed, and test after each refactor to preserve functionality.

Just before she's ready to push her changes, a big change lands on main, one that alters some code that she's interacting with.

She has to change from finishing off her feature to figuring out how to integrate her work with this change, which while better for her colleague, doesn't work so well for her. Hopefully the complexities of the change will be in merging the source code, not an insidious fault that only shows when she runs the application, forcing her to debug unfamiliar code.

At least in that scenario, she gets to find out before she submits her pull request. Pull requests can be fraught enough while waiting for someone to review a change. The review can take time, forcing her to context-switch from her next feature.

A difficult integration during that period can be very disconcerting, dragging out the review process even longer. And that may not even be the end of the story, since integration tests are often only run after the pull request is merged.

In time, this team may learn that making significant changes to core code causes this kind of problem, and thus stops doing it.

But that, by preventing regular refactoring, ends up allowing cruft to grow throughout the codebase. Folks who encounter a crufty code base wonder how it got into such a state, and often the answer lies in an integration process with so much friction that it discourages people from removing that cruft.

But this needn't be the way. Most projects done by my colleagues at Thoughtworks, and by many others around the world, treat integration as a non-event. Any individual developer's work is only a few hours away from a shared project state and can be integrated back into that state in minutes.

Any integration errors are found rapidly and can be fixed rapidly. This contrast isn't the result of an expensive and complex tool.

The essence of it lies in the simple practice of everyone on the team integrating frequently, at least daily, against a controlled source code repository. In this article, I explain what Continuous Integration is and how to do it well. I've written it for two reasons.

Firstly there are always new people coming into the profession and I want to show them how they can avoid that depressing warehouse. But secondly this topic needs clarity because Continuous Integration is a much misunderstood concept. There are many people who say that they are doing Continuous Integration, but once they describe their workflow, it becomes clear that they are missing important pieces.

A clear understanding of Continuous Integration helps us communicate, so we know what to expect when we describe our way of working. It also helps folks realize that there are further things they can do to improve their experience.

I originally wrote this article in , with an update in Since then much has changed in usual expectations of software development teams.

The many-month integration that I saw in the s is a distant memory, technologies such as version control and build scripts have become commonplace. I rewrote this article again in to better address the development teams of that time, with twenty years of experience to confirm the value of Continuous Integration.

The easiest way for me to explain what Continuous Integration is and how it works is to show a quick example of how it works with the development of a small feature. I'm currently working with a major manufacturer of magic potions, we are extending their product quality system to calculate how long the potion's effect will last.

We already have a dozen potions supported in the system, and we need to extend the logic for flying potions.

We've learned that having them wear off too early severely impacts customer retention. Flying potions introduce a few new factors to take care of, one of which is the moon phase during secondary mixing. I begin by taking a copy of the latest product sources onto my local development environment.

I do this by checking out the current mainline from the central repository with git pull. Once the source is in my environment, I execute a command to build the product.

This command checks that my environment is set up correctly, does any compilation of the sources into an executable product, starts the product, and runs a comprehensive suite of tests against it.

This should take only a few minutes, while I start poking around the code to decide how to begin adding the new feature. This build hardly ever fails, but I do it just in case, because if it does fail, I want to know before I start making changes.

If I make changes on top of a failing build, I'll get confused thinking it was my changes that caused the failure. Now I take my working copy and do whatever I need to do to deal with the moon phases. This will consist of both altering the product code, and also adding or changing some of the automated tests.

During that time I run the automated build and tests frequently. After an hour or so I have the moon logic incorporated and tests updated. I'm now ready to integrate my changes back into the central repository.

My first step for this is to pull again, because it's possible, indeed likely, that my colleagues will have pushed changes into the mainline while I've been working. Indeed there are a couple of such changes, which I pull into my working copy. I combine my changes on top of them and run the build again.

Usually this feels superfluous, but this time a test fails. The test gives me some clue about what's gone wrong, but I find it more useful to look at the commits that I pulled to see what changed.

It seems that someone has made an adjustment to a function, moving some of its logic out into its callers. They fixed all the callers in the mainline code, but I added a new call in my changes that, of course, they couldn't see yet. I make the same adjustment and rerun the build, which passes this time.

Since I was a few minutes sorting that out, I pull again, and again there's a new commit. However the build works fine with this one, so I'm able to git push my change up to the central repository. However my push doesn't mean I'm done. Once I've pushed to the mainline a Continuous Integration Service notices my commit, checks out the changed code onto a CI agent, and builds it there.

It's rare that something gets missed that causes the CI Services build to fail, but rare is not the same as never. The integration machine's build doesn't take long, but it's long enough that an eager developer would be starting to think about the next step in calculating flight time.

But I'm an old guy, so I enjoy a few minutes to stretch my legs and read an email. I soon get a notification from the CI service that all is well, so I start the process again for the next part of the change.

The story above is an illustration of Continuous Integration that hopefully gives you a feel of what it's like for an ordinary programmer to work with.
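The local part of that story (pull, build, and push only once the build passes against the latest mainline) can be sketched as a small loop. This is an illustrative sketch, not a real tool: `pull`, `build`, and `push` are hypothetical callables that the caller supplies (for example, thin wrappers around `git pull`, the project's build command, and `git push`), and `max_rounds` is an invented safety limit.

```python
def integrate(pull, build, push, max_rounds=10):
    """Pull, rebuild, and push only once a build passes on the latest mainline.

    pull()  -> True if new commits arrived from mainline
    build() -> True if the build and its tests pass
    push()  -> publishes the local commits
    All three are hypothetical callables supplied by the caller.
    """
    pull()  # pick up whatever colleagues pushed while we were working
    for _ in range(max_rounds):
        if not build():
            return "fix-needed"  # stop and repair locally before trying again
        if not pull():
            push()  # nothing new arrived since the passing build
            return "pushed"
        # New commits arrived while we were building; rebuild on top of them.
    return "gave-up"
```

A commit could still land between the final pull and the push. In that case a version control system such as git would reject the push, and the loop would simply be run again.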

But, as with anything, there's quite a few things to sort out when doing this in daily work. So now we'll go through the key practices that we need to do. These days almost every software team keeps their source code in a version control system, so that every developer can easily find not just the current state of the product, but all the changes that have been made to the product.

Version control tools allow a system to be rolled back to any point in its development, which can be very helpful to understand the history of the system, using Diff Debugging to find bugs.

As I write this, the dominant version control system is git. But while version control is commonplace, some teams fail to take full advantage of version control.

My test for full version control is that I should be able to walk up with a very minimally configured environment - say a laptop with no more than the vanilla operating system installed - and be able to easily build, and run the product after cloning the repository.

This means the repository should reliably return product source code, tests, database schema, test data, configuration files, IDE configurations, install scripts, third-party libraries, and any tools required to build the software.

You might notice I said that the repository should return all of these elements, which isn't the same as storing them.

We don't have to store the compiler in the repository, but we need to be able to get at the right compiler. If I check out last year's product sources, I may need to be able to build them with the compiler I was using last year, not the version I'm using now.

The repository can do this by storing a link to immutable asset storage - immutable in the sense that once an asset is stored with an id, I'll always get exactly that asset back again. Similar asset storage schemes can be used for anything too large, such as videos. Cloning a repository often means grabbing everything, even if it's not needed.

By using references to an asset store, the build scripts can choose to download only what's needed for a particular build. In general we should store in source control everything we need to build anything, but nothing that we actually build.
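One way to get the "same id, same asset" guarantee is content addressing, where the id is a hash of the asset's bytes. The text above does not say which scheme to use, so treat this as an assumption. The `AssetStore` class below is a hypothetical in-memory toy, not a real storage service:

```python
import hashlib


class AssetStore:
    """Toy content-addressed store: an asset's id is the SHA-256 of its bytes."""

    def __init__(self):
        self._blobs = {}

    def put(self, data: bytes) -> str:
        asset_id = hashlib.sha256(data).hexdigest()
        # Storing the same bytes twice is harmless; an id never points at new content.
        self._blobs.setdefault(asset_id, data)
        return asset_id

    def get(self, asset_id: str) -> bytes:
        data = self._blobs[asset_id]
        # Verify on read so a corrupted blob cannot be returned under a valid id.
        if hashlib.sha256(data).hexdigest() != asset_id:
            raise ValueError("asset content does not match its id")
        return data
```

The repository would then hold only the short id string, and a build script could call `get` for just the assets a particular build needs.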

Some people do keep the build products in source control, but I consider that to be a smell - an indication of a deeper problem, usually an inability to reliably recreate builds. It can be useful to cache build products, but they should always be treated as disposable, and it's usually good to then ensure they are removed promptly so that people don't rely on them when they shouldn't.

A second element of this principle is that it should be easy to find the code for a given piece of work. Part of this is clear names and URL schemes, both within the repository and within the broader enterprise.

It also means not having to spend time figuring out which branch within the version control system to use. Continuous Integration relies on having a clear mainline - a single, shared, branch that acts as the current state of the product.

This is the next version that will be deployed to production. The mainline is that branch on the central repository, so to add a commit to a mainline called main I need to first commit to my local copy of main and then push that commit to the central server.

However, my local copy of the mainline may be out of date, since in a Continuous Integration environment there are many commits pushed into mainline every day.

As much as possible, we should use text files to define the product and its environment.

I say this because, although version-control systems can store and track non-text files, they don't usually provide any facility to easily see the difference between versions. This makes it much harder to understand what change was made. It's possible that in the future we'll see more storage formats having the facility to create meaningful diffs, but at the moment clear diffs are almost entirely reserved for text formats.

Even there we need to use text formats that will produce comprehensible diffs. Turning the source code into a running system can often be a complicated process involving compilation, moving files around, loading schemas into databases, and so on. However like most tasks in this part of software development it can be automated - and as a result should be automated.

Asking people to type in strange commands or clicking through dialog boxes is a waste of time and a breeding ground for mistakes. Computers are designed to perform simple, repetitive tasks.

As soon as you have humans doing repetitive tasks on behalf of computers, all the computers get together late at night and laugh at you.

Most modern programming environments include tooling for automating builds, and such tools have been around for a long time. I first encountered them with make, one of the earliest Unix tools. Any instructions for the build need to be stored in the repository; in practice this means that we must use text representations.

That way we can easily inspect them to see how they work, and crucially, see diffs when they change. Thus teams using Continuous Integration avoid tools that require clicking around in UIs to perform a build or to configure an environment.

It's possible to use a regular programming language to automate builds, indeed simple builds are often captured as shell scripts. But as builds get more complicated it's better to use a tool that's designed with build automation in mind.

Partly this is because such tools will have built-in functions for common build tasks. But the main reason is that build tools work best with a particular way to organize their logic - an alternative computational model that I refer to as a Dependency Network. A dependency network organizes its logic into tasks which are structured as a graph of dependencies.

If I invoke the test task, it will look to see if the compile task needs to be run and if so invoke it first. Should the compile task itself have dependencies, the network will look to see if it needs to invoke them first, and so on backwards along the dependency chain.

A dependency network like this is useful for build scripts because often tasks take a long time, which is wasted if they aren't needed. If nobody has changed any source files since I last ran the tests, then I can save doing a potentially long compilation.

To tell if a task needs to be run, the most common and straightforward way is to look at the modification times of files. If any of the input files to the compilation have been modified later than the output, then we know the compilation needs to be executed if that task is invoked.
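The dependency network and the modification-time check can be sketched in a few lines of Python. This is a simplified illustration rather than how any particular build tool is implemented, and the `Task` class and `run` function are names invented for the sketch:

```python
import os


class Task:
    """A build task with input files, one output file, and upstream tasks."""

    def __init__(self, name, inputs, output, action, depends_on=()):
        self.name = name
        self.inputs = list(inputs)
        self.output = output
        self.action = action  # callable that produces `output`
        self.depends_on = list(depends_on)

    def is_stale(self):
        """True if the output is missing or older than any input."""
        if not os.path.exists(self.output):
            return True
        built_at = os.path.getmtime(self.output)
        return any(os.path.getmtime(path) > built_at for path in self.inputs)


def run(task, log):
    """Invoke upstream tasks first, then this task only if it is stale."""
    for upstream in task.depends_on:
        run(upstream, log)
    if task.is_stale():
        task.action()
        log.append(task.name)
```

Invoking a `test` task that depends on a `compile` task walks backwards along the chain first. If no source file has changed since the last run, neither action executes.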

A common mistake is not to include everything in the automated build. The build should include getting the database schema out of the repository and firing it up in the execution environment. I'll elaborate my earlier rule of thumb: anyone should be able to bring in a clean machine, check the sources out of the repository, issue a single command, and have a running system on their own environment.

While a simple program may only need a line or two of script file to build, complex systems often have a large graph of dependencies, finely tuned to minimize the amount of time required to build things. This website, for example, has over a thousand web pages.

My build system knows that should I alter the source for this page, I only have to build this one page. But should I alter a core file in the publication tool chain, then it needs to rebuild them all.

Either way, I invoke the same command in my editor, and the build system figures out how much to do. Depending on what we need, we may need different kinds of things to be built. We can build a system with or without test code, or with different sets of tests. Some components can be built stand-alone.

A build script should allow us to build alternative targets for different cases. Traditionally a build meant compiling, linking, and all the additional stuff required to get a program to execute.

A program may run, but that doesn't mean it does the right thing. Modern statically typed languages can catch many bugs, but far more slip through that net.

This is a critical issue if we want to integrate as frequently as Continuous Integration demands. If bugs make their way into the product, then we are faced with the daunting task of performing bug fixes on a rapidly-changing code base.

Manual testing is too slow to cope with the frequency of change. Faced with this, we need to ensure that bugs don't get into the product in the first place. The main technique to do this is a comprehensive test suite, one that is run before each integration to flush out as many bugs as possible.

Testing isn't perfect, of course, but it can catch a lot of bugs - enough to be useful. Early computers I used did a visible memory self-test when they were booting up, which led me to refer to this as Self Testing Code.

Writing self-testing code affects a programmer's workflow.

Any programming task combines both modifying the functionality of the program, and also augmenting the test suite to verify this changed behavior. A programmer's job isn't done merely when the new feature is working, but also when they have automated tests to prove it.
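To make that concrete, here is a small sketch using Python's standard `unittest` module, with product code and the tests that prove it written together. The `flight_duration_minutes` function and its doubling rule are hypothetical, invented only to echo the flying-potion example. The real potion logic is not given in this article.

```python
import unittest


def flight_duration_minutes(base_minutes, moon_phase):
    """Hypothetical rule: a full moon during secondary mixing doubles the effect."""
    if base_minutes < 0:
        raise ValueError("base duration cannot be negative")
    return base_minutes * 2 if moon_phase == "full" else base_minutes


class FlightDurationTest(unittest.TestCase):
    def test_full_moon_doubles_duration(self):
        self.assertEqual(flight_duration_minutes(30, "full"), 60)

    def test_other_phases_leave_duration_unchanged(self):
        self.assertEqual(flight_duration_minutes(30, "new"), 30)

    def test_negative_duration_is_rejected(self):
        with self.assertRaises(ValueError):
            flight_duration_minutes(-1, "full")
```

Saved in a file, these tests can be run with `python -m unittest`, and an automated build would run them the same way before every integration.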

Over the two decades since the first version of this article, I've seen programming environments increasingly embrace the need to provide the tools for programmers to build such test suites. The biggest push for this was JUnit, originally written by Kent Beck and Erich Gamma, which had a marked impact on the Java community in the late s.

This inspired similar testing frameworks for other languages, often referred to as xUnit frameworks. These stressed lightweight, programmer-friendly mechanics that allowed a programmer to easily build tests in concert with the product code.

A sound test suite would never allow a mischievous imp to do any damage without a test turning red. The test of such a test suite is that we should be confident that if the tests are green, then no significant bugs are in the product. I like to imagine a mischievous imp that is able to make simple modifications to the product code, such as commenting out lines, or reversing conditionals, but is not able to change the tests.

A sound test suite would never allow the imp to do any damage without a test turning red. And any test failing is enough to fail the build. Self-testing code is so important to Continuous Integration that it is a necessary prerequisite.
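The imp thought experiment can be sketched in a few lines. Everything here is hypothetical: `is_adult` stands in for product code, the two "imp" variants are hand-written mutations (a reversed conditional and an off-by-one), and `suite_is_green` is a toy stand-in for a real test suite.

```python
from typing import Callable


def is_adult(age: int) -> bool:
    """Original product code."""
    return age >= 18


def is_adult_reversed(age: int) -> bool:
    """The imp's first edit: the conditional has been reversed."""
    return age < 18


def is_adult_off_by_one(age: int) -> bool:
    """The imp's second edit: '>=' quietly became '>'."""
    return age > 18


def suite_is_green(implementation: Callable[[int], bool]) -> bool:
    """A tiny test suite: True only if every check passes."""
    checks = [
        implementation(18) is True,   # boundary case, catches the off-by-one
        implementation(17) is False,
        implementation(40) is True,
    ]
    return all(checks)
```

The suite stays green for `is_adult` and turns red for both mutated versions. Dropping the boundary check would let the off-by-one edit through, which is the kind of gap the imp is meant to expose.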

Often the biggest barrier to implementing Continuous Integration is insufficient skill at testing. That self-testing code and Continuous Integration are so tied together is no surprise.

Continuous Integration was originally developed as part of Extreme Programming and testing has always been a core practice of Extreme Programming. This testing is often done in the form of Test Driven Development (TDD), a practice that instructs us to never write new code unless it fixes a test that we've written just before.

TDD isn't essential for Continuous Integration, as tests can be written after production code as long as they are done before integration.

But I do find that, most of the time, TDD is the best way to write self-testing code. The tests act as an automated check of the health of the code base, and while tests are the key element of such an automated verification of the code, many programming environments provide additional verification tools.

Linters can detect poor programming practices and ensure code follows a team's preferred formatting style; vulnerability scanners can find security weaknesses.

Teams should evaluate these tools to include them in the verification process. Of course we can't count on tests to find everything. As it's often been said: tests don't prove the absence of bugs. However, perfection isn't the only point at which we get payback for a self-testing build.

Imperfect tests, run frequently, are much better than perfect tests that are never written at all. Integration is primarily about communication.

Integration allows developers to tell other developers about the changes they have made. Frequent communication allows people to know quickly as changes develop. The one prerequisite for a developer committing to the mainline is that they can correctly build their code. This, of course, includes passing the build tests.

As with any commit cycle the developer first updates their working copy to match the mainline, resolves any conflicts with the mainline, then builds on their local machine. If the build passes, then they are free to push to the mainline.
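The update, build, push cycle described above can be sketched as a small script. The specific commands are assumptions: a branch called `main` on a remote called `origin`, and `make test` as the local build. A real team would substitute its own tooling. The `run` parameter is injected so the flow can be exercised without touching a real repository.

```python
from typing import Callable, Sequence

# Hypothetical commands; substitute the team's own build tooling.
UPDATE = ["git", "pull", "--rebase", "origin", "main"]
BUILD = ["make", "test"]
PUSH = ["git", "push", "origin", "HEAD:main"]


def integrate(run: Callable[[Sequence[str]], int]) -> bool:
    """Update, build locally, and push only if every earlier step succeeded.

    `run` executes a command and returns its exit code (0 means success).
    """
    for step in (UPDATE, BUILD, PUSH):
        if run(step) != 0:
            return False  # stop at the first failure; never push a red build
    return True
```

In practice `run` might be `lambda cmd: subprocess.run(cmd).returncode`. A conflict during the update makes that step return a non-zero code, so the developer resolves it and starts the cycle again.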

If everyone pushes to the mainline frequently, developers quickly find out if there's a conflict between two developers. The key to fixing problems quickly is finding them quickly. With developers committing every few hours, a conflict can be detected within a few hours of it occurring. At that point not much has happened and it's easy to resolve.

Conflicts that stay undetected for weeks can be very hard to resolve. Conflicts in the codebase come in different forms. The easiest to find and resolve are textual conflicts, where two developers edit the same fragment of code in different ways. Version-control tools detect these easily once the second developer pulls the updated mainline into their working copy. The harder problem is Semantic Conflicts.

If my colleague changes the name of a function and I call that function in my newly added code, the version-control system can't help us. In a statically typed language we get a compilation failure, which is pretty easy to detect, but in a dynamic language we get no such help.

And even statically-typed compilation doesn't help us when a colleague makes a change to the body of a function that I call, making a subtle change to what it does. This is why it's so important to have self-testing code. A test failure alerts us that there's a conflict between changes, but we still have to figure out what the conflict is and how to resolve it.
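Here is a minimal sketch of a semantic conflict of this kind. All names are hypothetical: a colleague changes the body of `discount_rate` so that it returns a percentage instead of a fraction, while my new `final_price` code was written against the old behavior. The signature is unchanged, so neither the version-control system nor a type checker would complain; only a test notices.

```python
from typing import Callable


def discount_rate_v1(order_total: float) -> float:
    """Mainline before the colleague's change: returns a fraction (0.10 = 10%)."""
    return 0.10 if order_total >= 100 else 0.0


def discount_rate_v2(order_total: float) -> float:
    """After the change: same signature, but now returns a percentage."""
    return 10.0 if order_total >= 100 else 0.0


def final_price(order_total: float, discount_rate: Callable[[float], float]) -> float:
    """My newly added code, written against the v1 behavior."""
    return order_total * (1 - discount_rate(order_total))


def price_check_passes(discount_rate: Callable[[float], float]) -> bool:
    """The self-test: a 200.00 order should cost 180.00 after discount."""
    return abs(final_price(200.0, discount_rate) - 180.0) < 1e-9
```

The check passes against `discount_rate_v1` and fails against `discount_rate_v2`, even though both versions merge without any textual conflict.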

Since there's only a few hours of changes between commits, there's only so many places where the problem could be hiding. Furthermore since not much has changed we can use Diff Debugging to help us find the bug.
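One common form of Diff Debugging is a binary search over the commits between a known-good and a known-bad build. The sketch below assumes the commits are ordered oldest to newest, that the first is good and the last is bad, and that once the bug appears it stays; `is_good` is a hypothetical callback that builds and tests a given commit.

```python
from typing import Callable, Sequence


def first_bad_commit(commits: Sequence[str], is_good: Callable[[str], bool]) -> str:
    """Binary search for the first commit at which `is_good` fails.

    Assumes commits[0] is good, commits[-1] is bad, and every commit
    after the first bad one is also bad.
    """
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] good, commits[hi] bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_good(commits[mid]):
            lo = mid
        else:
            hi = mid
    return commits[hi]
```

With only a few hours of commits to search, this takes a handful of builds. Git users get the same search from `git bisect`.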

My general rule of thumb is that every developer should commit to the mainline every day. In practice, those experienced with Continuous Integration integrate more frequently than that.

The more frequently we integrate, the fewer places we have to look for conflict errors, and the more rapidly we fix conflicts. Frequent commits encourage developers to break down their work into small chunks of a few hours each.

This helps track progress and provides a sense of progress. Often people initially feel they can't do something meaningful in just a few hours, but we've found that mentoring and practice help us learn.

If everyone on the team integrates at least daily, this ought to mean that the mainline stays in a healthy state. In practice, however, things still do go wrong. This may be due to lapses in discipline, such as neglecting to update and build before a push. There may also be environmental differences between developer workspaces.

We thus need to ensure that every commit is verified in a reference environment. The usual way to do this is with a Continuous Integration Service (CI Service) that monitors the mainline. Examples of CI Services are tools like Jenkins, GitHub Actions, Circle CI etc. Every time the mainline receives a commit, the CI service checks out the head of the mainline into an integration environment and performs a full build.

Only once this integration build is green can the developer consider the integration to be complete. By ensuring we have a build with every push, should we get a failure, we know that the fault lies in that latest push, narrowing down where we have to look to fix it. I want to stress here that when we use a CI Service, we only use it on the mainline, which is the main branch on the reference instance of the version control system.

It's common to use a CI service to monitor and build from multiple branches, but the whole point of integration is to have all commits coexisting on a single branch. While it may be useful to use a CI service to do an automated build for different branches, that's not the same as Continuous Integration, and teams using Continuous Integration will only need the CI service to monitor a single branch of the product.

While almost all teams use CI Services these days, it is perfectly possible to do Continuous Integration without one. Team members can manually check out the head on the mainline onto an integration machine and perform a build to verify the integration.

But there's little point in a manual process when automation is so freely available. This is an appropriate point to mention that my colleagues at Thoughtworks have contributed a lot of open-source tooling for Continuous Integration, in particular Cruise Control - the first CI Service.

Continuous Integration can only work if the mainline is kept in a healthy state. Should the integration build fail, then it needs to be fixed right away.

This doesn't mean that everyone on the team has to stop what they are doing in order to fix the build; usually it only needs a couple of people to get things working again. It does mean a conscious prioritization of a build fix as an urgent, high priority task.

Usually the best way to fix the build is to revert the latest commit from the mainline, taking the system back to the last-known good build.

If the cause of the problem is immediately obvious then it can be fixed directly with a new commit, but otherwise reverting the mainline allows some folks to figure out the problem in a separate development environment, allowing the rest of the team to continue to work with the mainline.

Some teams prefer to remove all risk of breaking the mainline by using a Pending Head (also called Pre-tested, Delayed, or Gated Commit). To do this the CI service needs to set things up so that commits pushed to the mainline for integration do not immediately go onto the mainline.

Instead they are placed on another branch until the build completes and only migrated to the mainline after a green build. While this technique avoids any danger to mainline breaking, an effective team should rarely see a red mainline, and on the few times it happens its very visibility encourages folks to learn how to avoid it.
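The gating behavior can be modeled in a few lines. This is a toy sketch, not how any particular CI service implements it: `PendingHead` and its `build_is_green` callback are hypothetical names, and the "pending branch" is simply a candidate list of commits that replaces the mainline only after a green build.

```python
from typing import Callable, List


class PendingHead:
    """Toy model of a gated mainline: commits land only after a green build."""

    def __init__(self, build_is_green: Callable[[List[str]], bool]):
        self.mainline: List[str] = []
        self.rejected: List[str] = []
        self._build_is_green = build_is_green

    def push(self, commit: str) -> bool:
        """Build mainline plus the new commit; promote it only if green."""
        candidate = self.mainline + [commit]  # stands in for the pending branch
        if self._build_is_green(candidate):
            self.mainline = candidate
            return True
        self.rejected.append(commit)
        return False
```

The mainline never holds a commit that failed its build, at the cost of every push waiting on the build before it becomes visible to the rest of the team.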

The whole point of Continuous Integration is to provide rapid feedback. Nothing sucks the blood of Continuous Integration more than a build that takes a long time. Here I must admit a certain crotchety old guy amusement at what's considered to be a long build.

Most of my colleagues consider a build that takes an hour to be totally unreasonable. I remember teams dreaming that they could get it so fast - and occasionally we still run into cases where it's very hard to get builds to that speed. For most projects, however, the XP guideline of a ten minute build is perfectly within reason.

Most of our modern projects achieve this. It's worth putting in concentrated effort to make it happen, because every minute chiseled off the build time is a minute saved for each developer every time they commit.

Since Continuous Integration demands frequent commits, this adds up to a lot of time. If we're staring at a one hour build time, then getting to a faster build may seem like a daunting prospect. It can even be daunting to work on a new project and think about how to keep things fast.

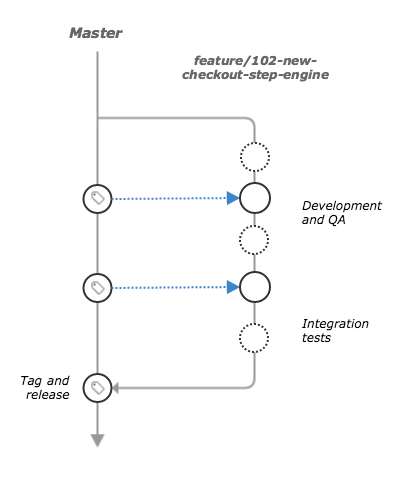

For enterprise applications, at least, we've found the usual bottleneck is testing - particularly tests that involve external services such as a database. Probably the most crucial step is to start working on setting up a Deployment Pipeline. The idea behind a deployment pipeline (also known as build pipeline or staged build) is that there are in fact multiple builds done in sequence.

The commit to the mainline triggers the first build - what I call the commit build. The commit build is the build that's needed when someone pushes commits to the mainline. The commit build is the one that has to be done quickly; as a result it will take a number of shortcuts that will reduce the ability to detect bugs.

The trick is to balance the needs of bug finding and speed so that a good commit build is stable enough for other people to work on. Once the commit build is good then other people can work on the code with confidence. However there are further, slower, tests that we can start to do.

Additional machines can run further testing routines on the build that take longer to do. A simple example of this is a two stage deployment pipeline.

The first stage would do the compilation and run tests that are more localized unit tests, with slow services replaced by Test Doubles such as a fake in-memory database or a stub for an external service. Such tests can run very fast, keeping within the ten minute guideline.
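As a sketch of the Test Double idea, the example below replaces a database-backed store with a fake in-memory one. The `CustomerStore` interface, `InMemoryCustomerStore`, and `greeting_recipient` are all hypothetical names for illustration; the code under test depends only on the interface, so a commit-build test can run without a real database.

```python
from typing import Dict, Optional, Protocol


class CustomerStore(Protocol):
    """The interface the product code depends on."""

    def get_email(self, customer_id: int) -> Optional[str]: ...


class InMemoryCustomerStore:
    """Test double: a fake store backed by a dict instead of a real database."""

    def __init__(self, emails: Dict[int, str]):
        self._emails = dict(emails)

    def get_email(self, customer_id: int) -> Optional[str]:
        return self._emails.get(customer_id)


def greeting_recipient(store: CustomerStore, customer_id: int) -> str:
    """Product code under test: look up and normalize a customer's email."""
    email = store.get_email(customer_id)
    if email is None:
        raise LookupError(f"unknown customer {customer_id}")
    return email.lower()
```

A test built on the fake exercises the lookup and normalization logic in microseconds. Whether the real database returns what the fake assumes is left to the slower second stage.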

However any bugs that involve larger scale interactions, particularly those involving the real database, won't be found. The second stage build runs a different suite of tests that do hit a real database and involve more end-to-end behavior.

This suite might take a couple of hours to run. In this scenario people use the first stage as the commit build and use this as their main CI cycle. If the secondary build fails, then this may not have the same 'stop everything' quality, but the team does aim to fix such bugs as rapidly as possible, while keeping the commit build running.

Since the secondary build may be much slower, it may not run after every commit. In that case it runs as often as it can, picking the last good build from the commit stage.
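The "picks the last good build" rule might be sketched as follows. The data shape is an assumption made for illustration: the commit stage's history is a list of `(commit, green)` pairs ordered oldest to newest, and `last_tested` is the commit the secondary build most recently ran against.

```python
from typing import List, Optional, Tuple


def next_for_secondary(
    commit_builds: List[Tuple[str, bool]], last_tested: Optional[str]
) -> Optional[str]:
    """Return the newest green commit build the secondary stage hasn't tested.

    Returns None when nothing green has appeared since `last_tested`.
    """
    for commit, green in reversed(commit_builds):
        if commit == last_tested:
            return None  # reached the last tested build without finding a newer green one
        if green:
            return commit
    return None
```

Each time the secondary build finishes, it calls this again and skips straight to the newest green commit build, so intermediate commits are covered in batches rather than one by one.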

If the secondary build detects a bug, that's a sign that the commit build could do with another test. As much as possible we want to ensure that any later-stage failure leads to new tests in the commit build that would have caught the bug, so the bug stays fixed in the commit build.

This way the commit tests are strengthened whenever something gets past them. There are cases where there's no way to build a fast-running test that exposes the bug, so we may decide to only test for that condition in the secondary build.

Most of the time, fortunately, we can add suitable tests to the commit build. Another way to speed things up is to use parallelism and multiple machines. Cloud environments, in particular, allow teams to easily spin up a small fleet of servers for builds.

Providing the tests can run reasonably independently, which well-written tests can, then using such a fleet can get very rapid build times. Such parallel cloud builds may also be worthwhile for a developer's pre-integration build. While we're considering the broader build process, it's worth mentioning another category of automation, interaction with dependencies.
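One simple way to spread independent tests across a fleet is a greedy split by recorded duration: take the tests longest first and give each to the machine with the least work so far. This is only a sketch; the `durations` map of test name to seconds is assumed to come from earlier runs, and real test runners often provide their own sharding.

```python
import heapq
from typing import Dict, List


def shard_tests(durations: Dict[str, float], workers: int) -> List[List[str]]:
    """Greedy split: assign each test, longest first, to the least-loaded worker."""
    heap = [(0.0, i) for i in range(workers)]  # (total seconds assigned, worker index)
    heapq.heapify(heap)
    shards: List[List[str]] = [[] for _ in range(workers)]
    for name, seconds in sorted(durations.items(), key=lambda kv: kv[1], reverse=True):
        load, i = heapq.heappop(heap)
        shards[i].append(name)
        heapq.heappush(heap, (load + seconds, i))
    return shards
```

The wall-clock time of the build is then roughly the load of the busiest shard rather than the sum of all test durations. That only holds if the tests really are independent of one another.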

Most software uses a large range of dependent software produced by different organizations. Changes in these dependencies can cause breakages in the product.

A team should thus automatically check for new versions of dependencies and integrate them into the build, essentially as if they were another team member. This should be done frequently, usually at least daily, depending on the rate of change of the dependencies.

A similar approach should be used with running Contract Tests. Continuous Integration means integrating as soon as there is a little forward progress and the build is healthy.

Frequently this suggests integrating before a user-visible feature is fully formed and ready for release. We thus need to consider how to deal with latent code: code that's part of an unfinished feature that's present in a live release.

Some people worry about latent code, because it's putting non-production quality code into the released executable. Teams doing Continuous Integration ensure that all code sent to the mainline is production quality, together with the tests that verify the code.

Latent code may never be executed in production, but that doesn't stop it from being exercised in tests. We can prevent the code being executed in production by using a Keystone Interface - ensuring the interface that provides a path to the new feature is the last thing we add to the code base.

Tests can still check the code at all levels other than that final interface. In a well-designed system, such interface elements should be minimal and thus simple to add with a short programming episode.
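A Keystone Interface can be sketched with a simple command table. The names here are hypothetical: `export_summary` is the latent feature, already on the mainline and covered by tests, while `COMMANDS` is the only path a user has into any feature. The keystone is the single table entry that is added last.

```python
from typing import Callable, Dict, List, Sequence

Row = Sequence[object]


def export_csv(rows: List[Row]) -> str:
    """Existing, released feature."""
    return "\n".join(",".join(str(cell) for cell in row) for row in rows)


def export_summary(rows: List[Row]) -> str:
    """Latent feature: on mainline and tested, but not yet reachable by users."""
    return f"{len(rows)} rows, {sum(len(row) for row in rows)} cells"


# The command table is the only path from the user to a feature.
COMMANDS: Dict[str, Callable[[List[Row]], str]] = {
    "export-csv": export_csv,
    # "export-summary": export_summary,  # the keystone: added last, in one small commit
}


def run_command(name: str, rows: List[Row]) -> str:
    """Dispatch a user command; unknown names are rejected."""
    if name not in COMMANDS:
        raise KeyError(f"unknown command: {name}")
    return COMMANDS[name](rows)
```

Tests call `export_summary` directly, so the latent code is exercised on every build, while users cannot reach it until the commented-out entry is enabled.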